AI Is Used to Predict the Synthesis of Complex New Materials: “Materials That No Chemist Could Predict”

Machine learning provides a roadmap for defining new materials for any purpose, with implications for green energy and waste reduction.

Every year, scientists and institutions invest more in discovering new materials that can fuel the world. Researchers are increasingly looking to nanomaterials to increase the value of high-performance products and reduce natural resource depletion.

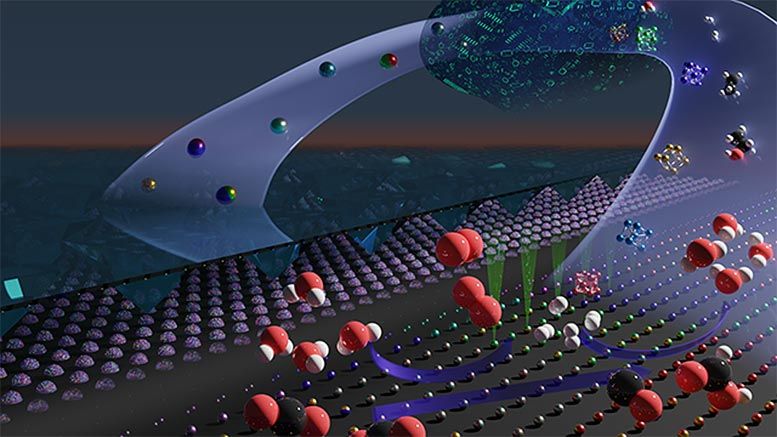

Nanoparticles are already being used in various applications, including energy storage and conversion, quantum computing, and therapeutics. However, the practically unlimited compositional and structural flexibility of nanochemistry makes it difficult to discover new materials.

Researchers at Northwestern University and the Toyota Research Institute (TRI) have successfully used machine learning to guide the synthesis of new nanomaterials, removing barriers associated with materials discovery. The highly trained algorithm analyzed a defined dataset to predict new structures that could be used in the automotive, chemical, and clean energy industries.

Chad Mirkin (a Northwestern expert in nanotechnology and the paper’s co-author) said that the model was asked to predict what combinations of up to seven elements would create something new. “The machine predicted 19 possibilities, and after testing each one experimentally, we found that 18 were correct.”

The paper, “Machine Learning-Accelerated Design and Synthesis of Polyelemental Heterostructures,” will appear in the December 22 issue of Science Advances.

Mirkin is the George B. Rathmann Professor of Chemistry at the Weinberg College of Arts and Sciences. He is also a professor of chemical and biomedical engineering and of materials science and engineering at the McCormick School of Engineering, and a professor of medicine at the Feinberg School of Medicine. Mirkin is also the founder of the International Institute for Nanotechnology.

Mapping the materials genome

Mirkin says what makes the result so important is access to unprecedentedly large, high-quality datasets, because machine learning models and AI algorithms can only be as good as the data they are trained on.

Mirkin invented the data-generation tool, called a “Megalibrary,” which dramatically expands a researcher’s field of view. Each Megalibrary contains millions, if not billions, of nanostructures with slightly different shapes, structures, and compositions, all placed on a 2-by-2 centimeter chip. Each chip contains more new inorganic materials than scientists have ever collected and categorized.

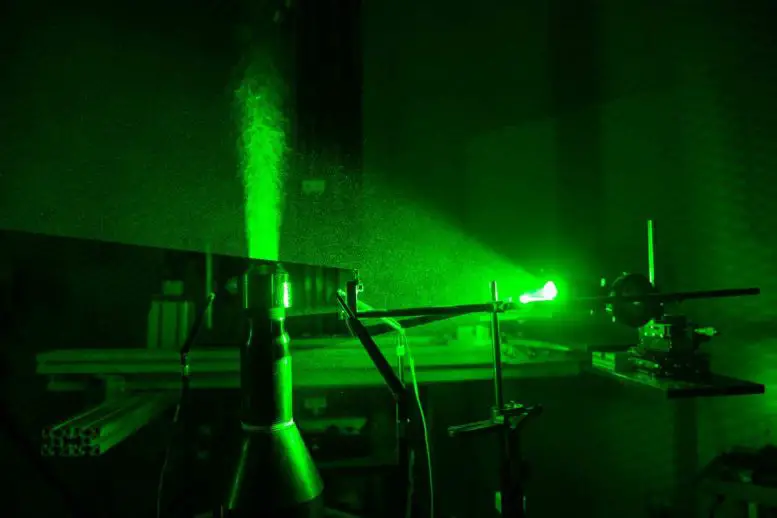

Mirkin’s team created the Megalibraries using polymer pen lithography, a technique also invented by Mirkin. It is a massively parallel nanolithography tool that allows the site-specific deposition and repositioning of hundreds of thousands of features per second.

When mapping the human genome, scientists were tasked with identifying combinations of four bases. The loosely analogous “materials genome” encompasses nanoparticle combinations of any of the 118 elements of the periodic table, along with parameters such as shape, size, and phase morphology. Building smaller subsets of nanoparticles, in the form of Megalibraries, will bring researchers closer to a complete map of a materials genome.

Mirkin said that even with a “genome” of materials in hand, it takes different tools to identify how to use and label the materials.

Mirkin stated, “Even though we can make materials quicker than anyone on Earth, that’s still just a droplet in the ocean full of possibility.” In his view, artificial intelligence is the way to define and mine the materials genome.

Machine learning applications are well suited to the complex task of defining and mining the materials genome. However, they are gated by the ability to create datasets that can train algorithms in this space. Mirkin stated that combining Megalibraries with machine learning could finally solve this problem, leading to a better understanding of the parameters that drive specific material properties.
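To give a rough sense of the scale involved, the short Python sketch below counts only the distinct element sets that can be drawn from 118 elements when up to seven are combined, the limit Mirkin mentions. It is an illustration, not part of the study: it ignores element ratios, shape, size, and phase, and it assumes every element is usable, which is unlikely in practice. The function names are hypothetical.

```python
from math import comb

ELEMENTS = 118      # periodic-table count quoted in the article
MAX_ELEMENTS = 7    # "up to seven elements", per Mirkin's description


def element_set_count(n_elements: int, k: int) -> int:
    """Number of unordered sets of k distinct elements chosen from n_elements."""
    return comb(n_elements, k)


def cumulative_count(n_elements: int, max_k: int) -> int:
    """Number of element sets containing between 1 and max_k elements."""
    return sum(element_set_count(n_elements, k) for k in range(1, max_k + 1))


# Print the count for each set size, then the running total.
for k in range(1, MAX_ELEMENTS + 1):
    print(f"{k} elements: {element_set_count(ELEMENTS, k):,} possible sets")

print(f"up to {MAX_ELEMENTS} elements: {cumulative_count(ELEMENTS, MAX_ELEMENTS):,} sets")
```

Even this simplified count runs into the billions of element sets before any structural parameter is considered, which is why serial experimentation alone cannot cover the space.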

“Materials that no chemist could have predicted”

Megalibraries are a map; machine learning is the legend.

Mirkin says that Megalibraries can provide the high-quality, large-scale materials data needed to train artificial intelligence (AI). This allows researchers to move beyond the “keen chemical intuition” and serial experimentation usually associated with the materials discovery process.

Mirkin stated that Northwestern had both the synthesis and the state-of-the-art characterization capabilities necessary to determine the structure of the materials it generates. His group worked closely with TRI’s AI team to create data inputs that allowed the AI algorithms to make these predictions about materials that no chemist could have predicted.

For the study, the team compiled previously generated Megalibrary structural data covering nanoparticles of complex compositions, structures, sizes, and morphologies. They used this data to train the model, then asked it to predict combinations of four, five, and six elements that would lead to a particular structural feature. The machine learning model correctly predicted new materials in 18 of 19 predictions, an accuracy rate of approximately 95%.

Using only the training data, and with little to no knowledge of chemistry or physics, the model accurately predicted complex structures that had never existed on Earth.

According to Joseph Montoya (senior research scientist at TRI), “These data suggest that the application of machine learning, combined with Megalibrary technology, may be the way to finally defining the materials genome.”
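The reported figure is simple arithmetic: 18 correct predictions out of 19 attempts. The Python sketch below reproduces it and, as an addition that is not in the paper or the article, also computes a Wilson score interval to show how much uncertainty a sample of only 19 trials carries. The function names and the choice of a 95% interval (z = 1.96) are assumptions made for illustration.

```python
from math import sqrt


def hit_rate(successes: int, trials: int) -> float:
    """Fraction of predictions confirmed by experiment."""
    return successes / trials


def wilson_interval(successes: int, trials: int, z: float = 1.96) -> tuple[float, float]:
    """Wilson score interval for a binomial proportion (z = 1.96 for about 95%)."""
    p = successes / trials
    denom = 1 + z**2 / trials
    center = (p + z**2 / (2 * trials)) / denom
    half_width = z * sqrt(p * (1 - p) / trials + z**2 / (4 * trials**2)) / denom
    return center - half_width, center + half_width


rate = hit_rate(18, 19)            # figures reported in the article
low, high = wilson_interval(18, 19)
print(f"hit rate: {rate:.1%}")
print(f"approximate 95% interval: {low:.1%} to {high:.1%}")
```

The interval is wide because the test set is small, so the headline accuracy is best read as strong early evidence rather than a precise long-run rate.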

Metal nanoparticles show promise for catalyzing industrially critical reactions such as hydrogen evolution, carbon dioxide (CO2) reduction, and oxygen reduction and evolution. The model was trained on a large dataset built at Northwestern to search for multi-metallic nanoparticles with defined parameters such as phase, size, dimension, and other structural features that affect the properties and functions of nanoparticles.

Megalibrary technology could also lead to discoveries in many areas critical to the future, such as plastic upcycling and superconductors.

A tool that is more reliable over time

Before Megalibraries, machine learning tools were trained on incomplete datasets from different sources, which limited their ability to predict and generalize. Megalibraries let machine learning tools do what they do best: learn and become more intelligent over time. Mirkin stated that their model will only get better at correctly predicting materials as it is fed more high-quality data collected under controlled conditions.

Montoya stated that creating this AI capability is about being able to predict the materials needed for any application. The more data the team has, the greater its predictive capability. Training starts with one dataset, and more data is added as the model learns. Montoya likened this to taking a child from kindergarten to a Ph.D.: combined knowledge and experience ultimately determine how far they can go.

The team is now using this approach to identify catalysts critical to fueling processes in the clean energy, automotive, and chemical industries. Identifying new green catalysts will enable the conversion of many waste products into useful matter, hydrogen production, carbon dioxide utilization, and the development of fuel cells. Producing new catalysts could also replace rare and expensive materials such as iridium, the metal used to generate green hydrogen and CO2 reduction products.